Abstract

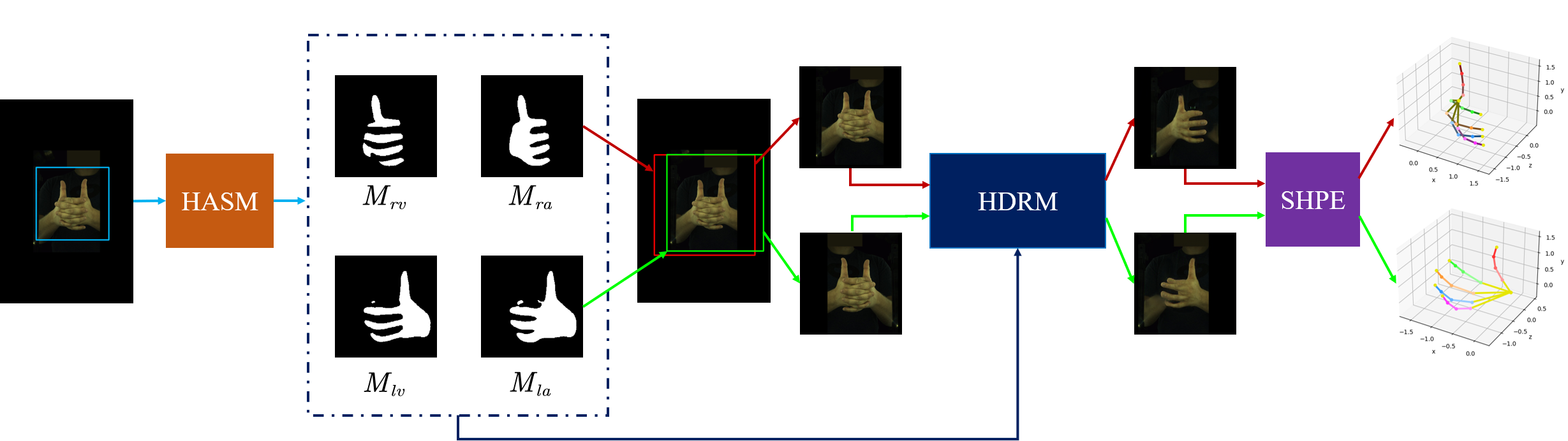

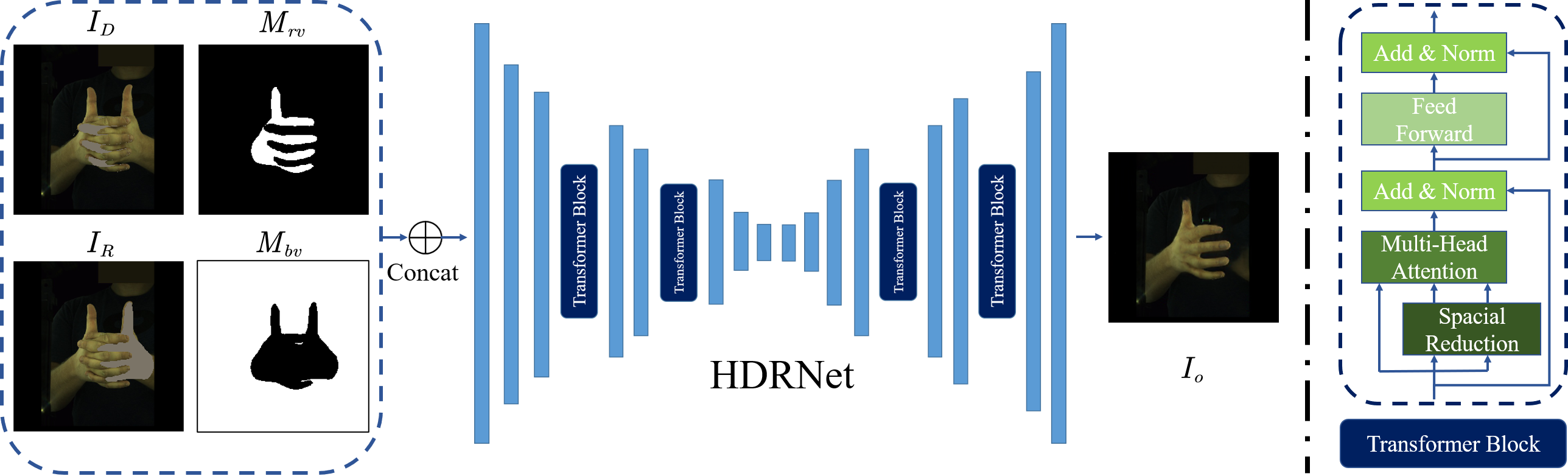

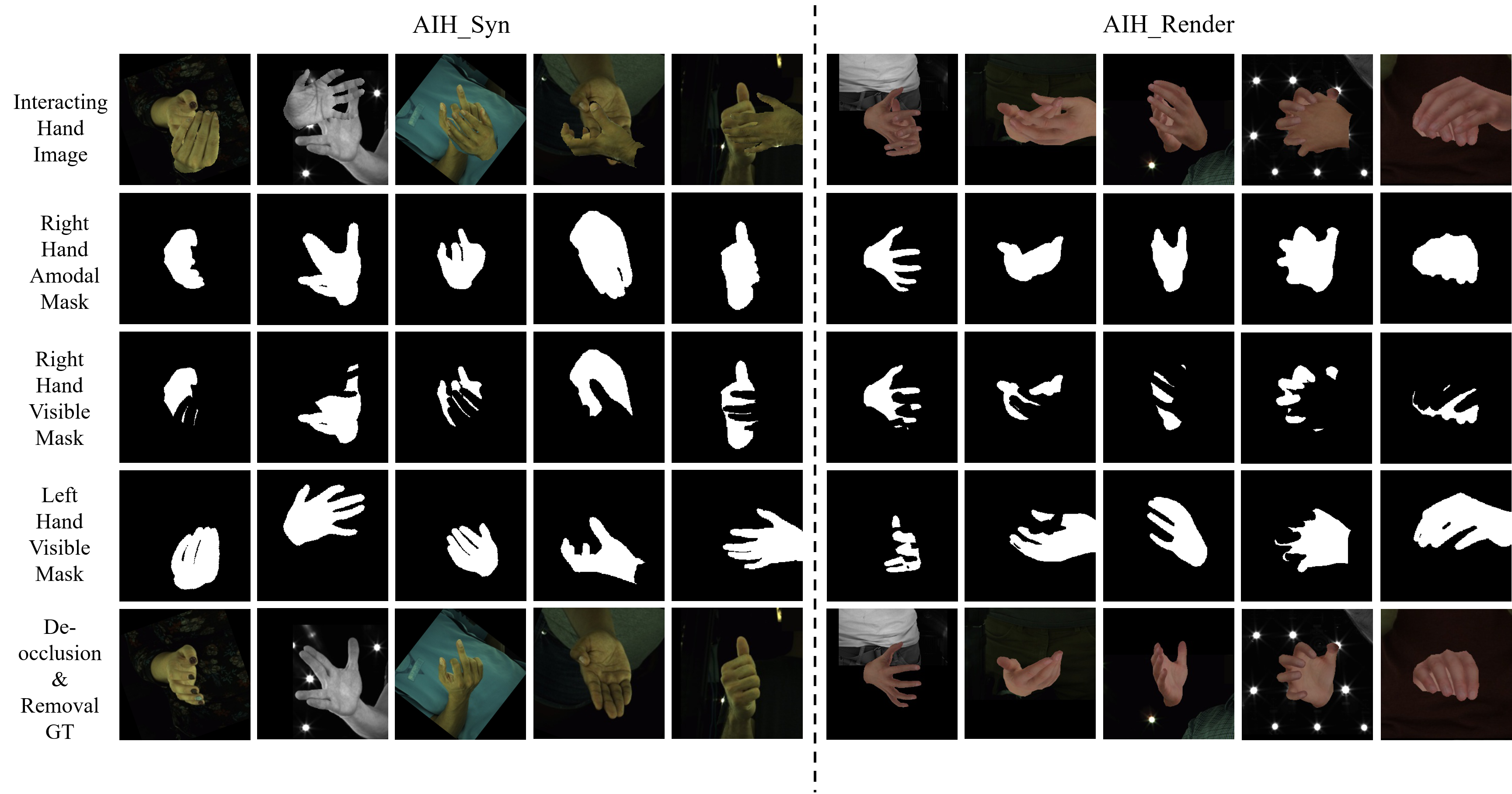

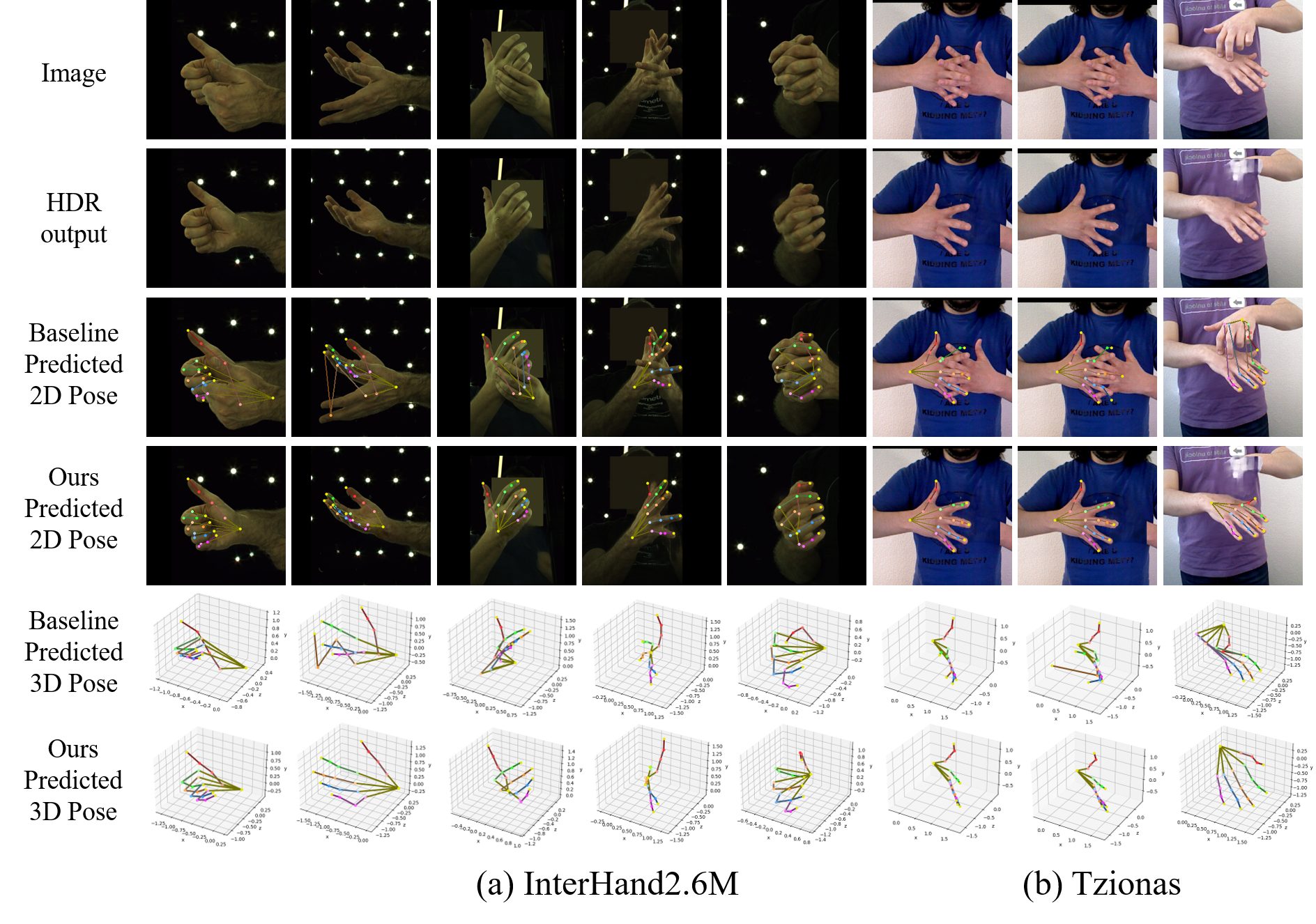

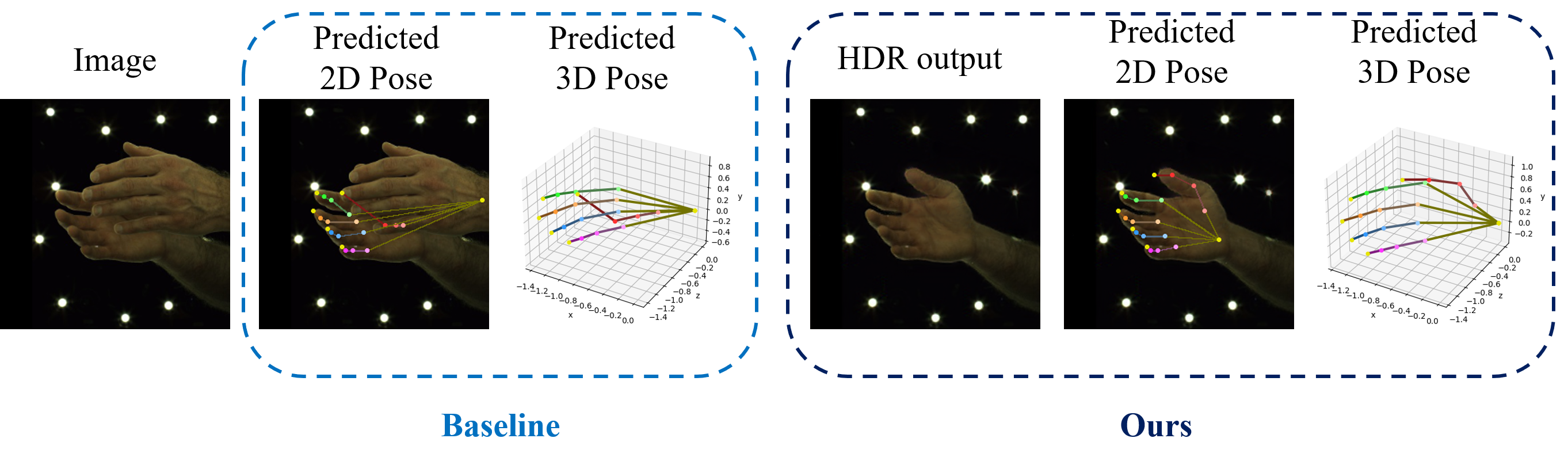

Estimating 3D interacting hand pose from a single RGB image is essential for understanding human actions. Unlike most previous works, which directly predict the 3D poses of two interacting hands simultaneously, we propose to decompose the challenging interacting hand pose estimation task and estimate the pose of each hand separately. This decomposition makes it straightforward to take advantage of the latest research progress in single-hand pose estimation. However, hand pose estimation in interacting scenarios remains very challenging due to (1) severe hand-hand occlusion and (2) ambiguity caused by the homogeneous appearance of hands. To tackle these two challenges, we propose a novel Hand De-occlusion and Removal (HDR) framework that performs hand de-occlusion and distractor removal. We also propose the first large-scale synthetic amodal hand dataset, termed Amodal InterHand Dataset (AIH), to facilitate model training and promote related research. Experiments show that the proposed method significantly outperforms previous state-of-the-art interacting hand pose estimation approaches.